Abstract

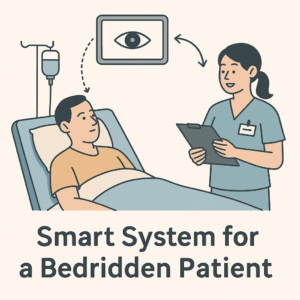

This project develops an intelligent, hands-free wheelchair control system driven by eye gaze direction. It uses computer vision and machine learning to detect eye movements and classify them (looking left, right, up, down, or steady) into directional commands. Working from a webcam feed, facial landmark detection (via Dlib and Haar cascades) isolates the eye regions, and Histogram of Oriented Gradients (HOG) features extracted from those regions are classified in real time by a K-Nearest Neighbors (KNN) algorithm.
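The HOG-plus-KNN step described above can be illustrated with a minimal, dependency-free sketch. It is not the project's implementation: `hog_features` is a simplified HOG (one orientation histogram per cell, no block normalization), `synthetic_eye` is a hypothetical stand-in for a cropped eye region, and the patch size, cell size, and `k` are assumed values chosen only for the example.

```python
import math
from collections import Counter

def hog_features(img, cell=4, bins=9):
    """Simplified HOG: one unsigned-orientation histogram per cell,
    L2-normalised per cell (no overlapping blocks)."""
    h, w = len(img), len(img[0])
    feats = []
    for cy in range(0, h - cell + 1, cell):
        for cx in range(0, w - cell + 1, cell):
            hist = [0.0] * bins
            for y in range(cy, cy + cell):
                for x in range(cx, cx + cell):
                    # Central differences, clamped at the image border.
                    gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
                    gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
                    angle = math.degrees(math.atan2(gy, gx)) % 180.0
                    hist[int(angle / (180.0 / bins)) % bins] += math.hypot(gx, gy)
            norm = math.sqrt(sum(v * v for v in hist)) + 1e-6
            feats.extend(v / norm for v in hist)
    return feats

def knn_predict(train, query, k=3):
    """train is a list of (feature_vector, label); majority vote of the k nearest."""
    nearest = sorted(train, key=lambda s: math.dist(s[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def synthetic_eye(px, py, dark=0, bright=255, size=12, r=2):
    """Hypothetical eye patch: a dark square 'pupil' centred at (px, py)."""
    return [[dark if abs(x - px) <= r and abs(y - py) <= r else bright
             for x in range(size)] for y in range(size)]

# Assumed pupil positions for each gaze class in the toy 12x12 patch.
POSITIONS = {"left": (2, 6), "right": (9, 6), "up": (6, 2),
             "down": (6, 9), "steady": (6, 6)}

# Toy training set: each class captured under three contrast levels.
train = [(hog_features(synthetic_eye(px, py, dark, bright)), label)
         for label, (px, py) in POSITIONS.items()
         for dark, bright in [(0, 255), (30, 220), (50, 200)]]

# Classify a patch with a contrast level not seen during training.
query = hog_features(synthetic_eye(2, 6, dark=60, bright=180))
prediction = knn_predict(train, query, k=3)
```

Because each cell histogram is normalised, a change in overall contrast leaves the features almost unchanged, which is one reason gradient-based descriptors suit webcam input under varying lighting. A real pipeline would typically use library implementations of HOG and KNN rather than the hand-rolled versions shown here.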

The interface is built with PyQt5 and provides a GUI for capturing datasets, training models, and monitoring detection. The system can also optionally send movement commands over a serial link to external hardware, such as a microcontroller-controlled wheelchair. The project aims to enhance mobility for people with physical disabilities by providing a low-cost, non-invasive alternative to traditional control methods.
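As a rough sketch of how the optional serial output might work, the example below maps each gaze label to a command byte and writes it to a port object. Everything specific here is an assumption: the one-byte protocol in `COMMANDS`, the `CommandSender` class, and the rule of sending only after the same label has been seen for several consecutive frames are illustrative and not taken from the project. An in-memory `io.BytesIO` stands in for the hardware port; any object with a `write(bytes)` method, such as a pyserial `serial.Serial` instance, could be passed instead.

```python
import io

# Hypothetical one-byte protocol; the actual firmware commands are not
# specified in the source. Mapping "up" to forward is also an assumption.
COMMANDS = {"up": b"F", "down": b"B", "left": b"L", "right": b"R", "steady": b"S"}

class CommandSender:
    """Sends a command only once a gaze label has been stable for several
    consecutive frames, and only when it differs from the last one sent."""

    def __init__(self, port, stable_frames=3):
        self.port = port                  # any object with write(bytes)
        self.stable_frames = stable_frames
        self._candidate = None
        self._count = 0
        self._last_sent = None

    def update(self, label):
        """Feed one per-frame prediction; returns the bytes sent, or None."""
        if label == self._candidate:
            self._count += 1
        else:
            self._candidate, self._count = label, 1
        if self._count >= self.stable_frames and label != self._last_sent:
            command = COMMANDS[label]
            self.port.write(command)
            self._last_sent = label
            return command
        return None

port = io.BytesIO()  # stand-in for a real serial port
sender = CommandSender(port)
frames = ["steady", "left", "left", "left", "left",
          "right", "left", "steady", "steady", "steady"]
for label in frames:
    sender.update(label)

sent = port.getvalue()  # b"LS": the single-frame "right" glance is ignored
```

Requiring a few stable frames before sending is one simple way to keep a blink or a brief glance from moving the chair; the threshold would need tuning against the actual frame rate.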

![EYE CONTROLLED-WHEEL-CHAIR [software only]](https://icruxlabs.com/wp-content/uploads/2025/04/eye-control-wheelchair.png)

![EYE CONTROLLED-WHEEL-CHAIR [software only] - Image 2](https://icruxlabs.com/wp-content/uploads/2025/04/vlcsnap-00010.jpg)

![EYE CONTROLLED-WHEEL-CHAIR [software only] - Image 3](https://icruxlabs.com/wp-content/uploads/2025/04/vlcsnap-00011.jpg)

![EYE CONTROLLED-WHEEL-CHAIR [software only] - Image 4](https://icruxlabs.com/wp-content/uploads/2025/04/vlcsnap-00012.jpg)

![EYE CONTROLLED-WHEEL-CHAIR [software only] - Image 5](https://icruxlabs.com/wp-content/uploads/2025/04/vlcsnap-00013.jpg)

![EYE CONTROLLED-WHEEL-CHAIR [software only] - Image 6](https://icruxlabs.com/wp-content/uploads/2025/04/vlcsnap-00014.jpg)

